Meaningful, Effective and Equitable Assignments and Exams

Prior to creating an assignment, define the following:

- Goals for the assignment (what outcomes do you aim to measure?)

- The level of your students (what do they already know?)

- What your students need to know (do they understand what you are assigning?)

Additional considerations include effectiveness, equity, academic integrity, validity, reliability, and authenticity.

A valid assessment measures what it is supposed to measure and provides accurate information about students’ mastery of the intended learning objectives or outcomes. An assessment that includes vaguely written questions may not be valid, because those questions measure students’ ability to guess the instructor’s intent as much as they measure mastery of the content. Validity is the most critical characteristic because the decisions made on the basis of assessments (e.g., grades, instruction, curriculum) depend on those assessments being true measurements of achievement.

A reliable assessment consistently yields the same results under identical circumstances. When designing an assessment, it is important to establish explicit performance criteria so that students' performances are evaluated the same way on different occasions and by different evaluators of similar qualifications. For example, essays graded without explicit performance criteria may be scored differently by different evaluators, and essays of similar quality may be judged inconsistently by the same evaluator. In that case, the assessment is not reliable.

An authentic assessment challenges students to synthesize the knowledge and skills they have acquired to perform a task that closely resembles the real‐world situations in which those abilities are used. Authentic assessments measure student achievement by the most direct and relevant means possible, and they promote the integration of factual knowledge, higher-level thinking, and relevant skills in a meaningful context. For example, a contemporary social issues course that aims to prepare students to educate themselves about issues and make judgments about policy positions could use an assessment that asks students to do exactly that.

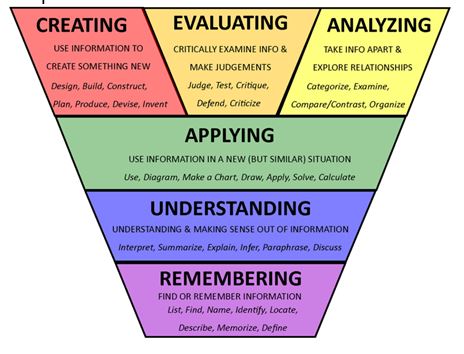

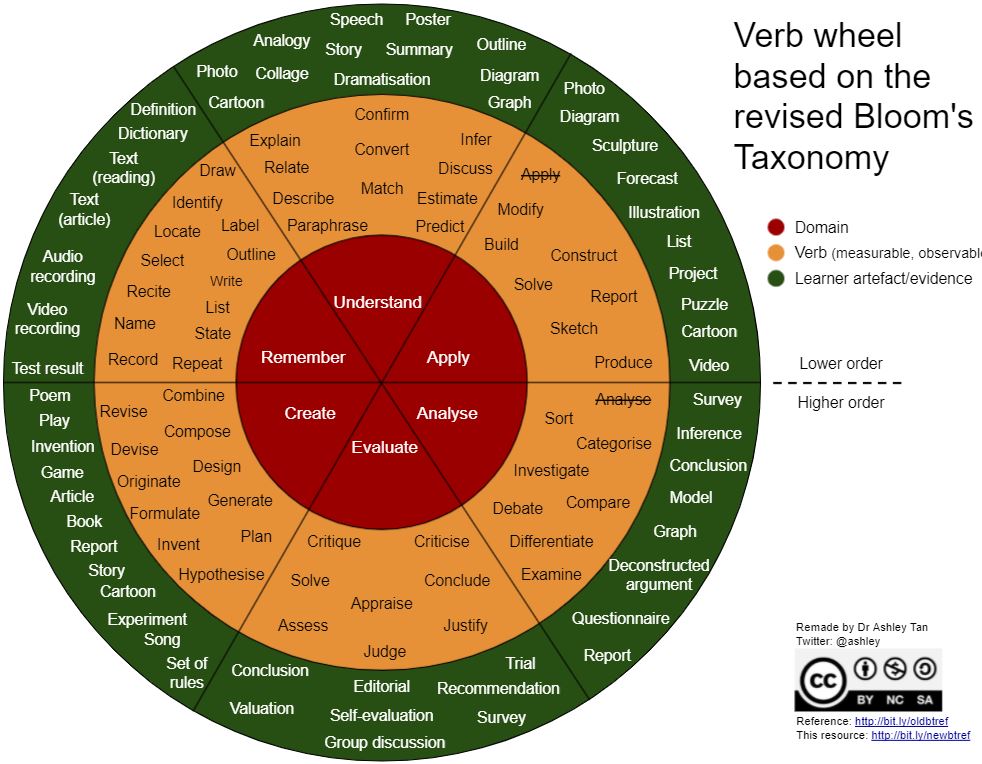

Creating Assignments Using Bloom's Taxonomy

To create assignments, begin with the learning outcomes, because the learning outcomes determine the method of assessment. For example, the lowest level of Bloom's Taxonomy is Remembering, which includes verbs such as list, find, describe, and locate. An assignment that assesses a remembering learning outcome could be an objective test with fill-in-the-blank, matching, or multiple-choice questions. These test items require students to recall facts or basic concepts.

Here are examples of assessments, learning outcomes, and instructional strategies aligned to all levels of Bloom's Taxonomy. Also see the graphic at the bottom of the page, which illustrates Bloom's Taxonomy levels aligned to verbs and assignments.

Types of Assignments

Select the assignments below for resources to create meaningful, effective and equitable assignments aligned with outcomes.

Bloom's Taxonomy Levels, Verbs, and Assignments

This page is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Creating Assignments and Exams Assessment for Registered Faculty

If you are a registered Assessment Bootcamp faculty member, complete the Creating Assignments and Exams Assessment before proceeding to Creating Rubrics.

Assessment Bootcamp

The next lesson explains the value of using rubrics to assess student learning.